Dam101_unit5

Unit 5: Sequence Models

Sequence models are a broad category of models designed to handle data that comes in sequences, such as text, audio, and time series. These models capture dependencies and patterns over time or other ordered structures.

Recurrent Neural Networks (RNNs)

What is an RNN?

A Recurrent Neural Network (RNN) is a type of deep learning model specifically designed to handle sequential data by leveraging its inherent temporal dependencies. This makes RNNs particularly suitable for tasks such as natural language processing (NLP), speech recognition, and time-series prediction.

How do RNNs Work?

RNNs are composed of interconnected layers, including input, output, and hidden layers. The key feature of RNNs is their ability to maintain a form of memory by using feedback loops. This enables them to consider previous inputs while processing current ones, thereby allowing them to exhibit dynamic temporal behavior.

Hidden Layer Functionality in RNNs

RNNs operate by sequentially passing input data through hidden layers. These hidden layers possess a unique recurrent capability: they retain a summary of past inputs, called the hidden state, and use it to inform future predictions, akin to a short-term memory mechanism. At each step, the network combines the current input with the stored memory from previous steps to predict the next element of the sequence.

For instance, imagine the input sequence “Rose is.” To predict “red,” the RNN starts by processing “Rose,” storing this information in its hidden layer. As it encounters “is,” it recalls the context “Rose” from its memory and interprets the complete sequence “Rose is,” enabling it to predict “red” with greater accuracy. This capability makes RNNs highly effective in tasks such as speech recognition, machine translation, and other forms of language modeling.
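The "Rose is" walkthrough above can be sketched as the forward pass of a vanilla RNN. This is a minimal NumPy sketch, not a trained model: the toy vocabulary, the hidden size, and the random weights `W_xh`, `W_hh`, and `b_h` are all assumptions made for illustration.

```python
import numpy as np

def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Run a vanilla RNN over a sequence and return the hidden state at every step."""
    h = np.zeros(W_hh.shape[0])            # the memory starts empty
    states = []
    for x_t in inputs:                     # one time step per input vector
        # new memory = f(current input, previous memory)
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(0)
vocab = {"Rose": 0, "is": 1, "red": 2}     # hypothetical toy vocabulary
hidden_size = 4                            # arbitrary choice
W_xh = rng.normal(scale=0.5, size=(hidden_size, len(vocab)))
W_hh = rng.normal(scale=0.5, size=(hidden_size, hidden_size))
b_h = np.zeros(hidden_size)

one_hot = np.eye(len(vocab))
sequence = [one_hot[vocab["Rose"]], one_hot[vocab["is"]]]

states = rnn_forward(sequence, W_xh, W_hh, b_h)
is_alone = rnn_forward([one_hot[vocab["is"]]], W_xh, W_hh, b_h)

print(states.shape)                        # (2, 4): one hidden state per word
# The state after "is" differs depending on whether "Rose" came first
print(np.allclose(states[1], is_alone[0]))
```

The second comparison is the point of the sketch: the hidden state after "is" carries information about "Rose", which a feed-forward network seeing only "is" would not have.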

Training RNNs

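Training RNNs involves optimizing parameters to minimize prediction errors. Backpropagation through time (BPTT) is the fundamental technique: it unrolls the network across time steps and applies backpropagation to the unrolled graph. Because the same weights and biases are shared at every time step, their gradients are summed over all steps before the parameters are updated.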
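To make BPTT concrete, here is a small sketch for a scalar RNN with a squared-error loss on the final hidden state. The model form `h_t = tanh(w * h_{t-1} + u * x_t)`, the loss, and all numeric values are assumptions chosen to keep the example short; real RNNs use weight matrices and usually a loss at every step.

```python
import numpy as np

def forward(w, u, xs):
    """Scalar RNN: h_t = tanh(w * h_{t-1} + u * x_t), starting from h_0 = 0."""
    hs = [0.0]
    for x in xs:
        hs.append(np.tanh(w * hs[-1] + u * x))
    return hs

def loss(w, u, xs, y):
    """Squared error between the final hidden state and the target y."""
    return 0.5 * (forward(w, u, xs)[-1] - y) ** 2

def bptt(w, u, xs, y):
    """Gradients of the loss with respect to the shared weights w and u."""
    hs = forward(w, u, xs)
    dw, du = 0.0, 0.0
    dh = hs[-1] - y                        # dL/dh_T
    for t in range(len(xs), 0, -1):        # walk backwards through time
        dz = dh * (1.0 - hs[t] ** 2)       # back through tanh
        dw += dz * hs[t - 1]               # same w at every step: gradients add up
        du += dz * xs[t - 1]
        dh = dz * w                        # pass the gradient on to step t-1
    return dw, du

w, u, xs, y = 0.8, 0.5, [1.0, -0.5, 0.25], 0.3   # arbitrary toy values
dw, du = bptt(w, u, xs, y)

# Sanity check against a central finite difference
eps = 1e-6
dw_numeric = (loss(w + eps, u, xs, y) - loss(w - eps, u, xs, y)) / (2 * eps)
du_numeric = (loss(w, u + eps, xs, y) - loss(w, u - eps, xs, y)) / (2 * eps)
print(abs(dw - dw_numeric), abs(du - du_numeric))
```

Comparing the analytic gradient with a finite-difference estimate is a common way to check a hand-written backward pass.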

Types of RNNs

RNN architectures vary based on the nature of input-output relationships:

One-to-many: Generates multiple outputs from a single input, useful in applications like image captioning.

Many-to-many: Processes multiple inputs to produce multiple outputs, such as language translation.

Many-to-one: Maps multiple inputs to a single output, beneficial for sentiment analysis or other sequence classification tasks.

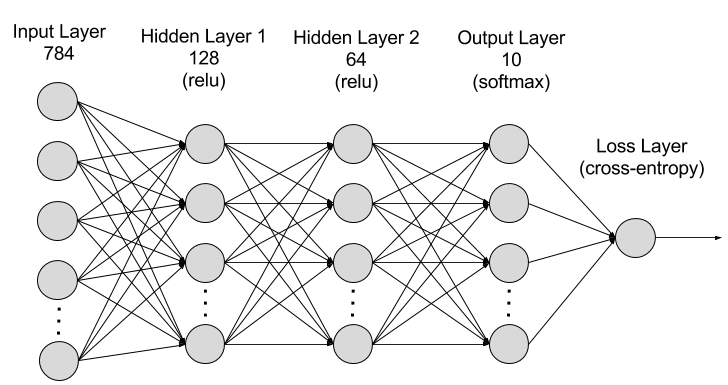
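The three input-output patterns differ mainly in which hidden states are read out. The sketch below shows this with array shapes only; the sizes, the random weights, and the output matrix `W_hy` are assumptions, and the one-to-many loop is a simplification (real captioning models typically feed each generated output back in as the next input).

```python
import numpy as np

rng = np.random.default_rng(1)
T, input_size, hidden_size, output_size = 5, 3, 4, 2   # arbitrary toy sizes
W_xh = rng.normal(scale=0.5, size=(hidden_size, input_size))
W_hh = rng.normal(scale=0.5, size=(hidden_size, hidden_size))
W_hy = rng.normal(scale=0.5, size=(output_size, hidden_size))
xs = rng.normal(size=(T, input_size))

# Run the recurrence over all T inputs and keep every hidden state
h = np.zeros(hidden_size)
states = []
for x_t in xs:
    h = np.tanh(W_xh @ x_t + W_hh @ h)
    states.append(h)
states = np.stack(states)                  # shape (T, hidden_size)

many_to_many = states @ W_hy.T             # one output per time step
many_to_one = W_hy @ states[-1]            # only the final state is read out

# One-to-many: a single input sets the state, then the state evolves on its own
h = np.tanh(W_xh @ xs[0])
one_to_many = []
for _ in range(T):
    one_to_many.append(W_hy @ h)
    h = np.tanh(W_hh @ h)
one_to_many = np.stack(one_to_many)

print(many_to_many.shape, many_to_one.shape, one_to_many.shape)
# (5, 2) (2,) (5, 2)
```

The many-to-many case shown here produces one output per input step. Translation systems usually use an encoder-decoder arrangement instead, where input and output lengths can differ.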

Comparisons with Other Neural Networks

RNNs vs. Feed-forward Neural Networks: Unlike feed-forward networks that process inputs independently, RNNs maintain state or memory, making them suitable for sequential data.

RNNs vs. Convolutional Neural Networks (CNNs): While CNNs excel at spatial data processing, RNNs are designed to capture temporal dependencies in sequential data.

Variants of RNN Architecture

Several RNN variants have been developed to address specific challenges and improve performance:

Bidirectional RNNs (BRNNs): Enhance context understanding by processing data in both forward and backward directions.

Long Short-Term Memory (LSTM): Introduces memory cells to better retain and utilize information over longer sequences.

Gated Recurrent Units (GRUs): Simplify the architecture by combining the forget and input gates of LSTMs into a single update gate and by merging the cell state with the hidden state.
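As an illustration of gating, a single GRU step can be sketched as follows. This is a simplified version under stated assumptions: biases are omitted, the weights are random rather than trained, and the parameter names (`W_z`, `U_z`, and so on) are hypothetical. Conventions also differ on whether `z` or `1 - z` weights the old state; this sketch uses `(1 - z) * h + z * h_candidate`.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def gru_step(x, h, p):
    """One GRU update: gates decide how much old memory to keep or overwrite."""
    z = sigmoid(p["W_z"] @ x + p["U_z"] @ h)               # update gate
    r = sigmoid(p["W_r"] @ x + p["U_r"] @ h)               # reset gate
    h_candidate = np.tanh(p["W_h"] @ x + p["U_h"] @ (r * h))
    # Blend old state and candidate; z near 0 keeps the old memory
    return (1.0 - z) * h + z * h_candidate

rng = np.random.default_rng(2)
input_size, hidden_size = 3, 4                              # arbitrary toy sizes
params = {}
for gate in ("z", "r", "h"):
    params[f"W_{gate}"] = rng.normal(scale=0.5, size=(hidden_size, input_size))
    params[f"U_{gate}"] = rng.normal(scale=0.5, size=(hidden_size, hidden_size))

h = np.zeros(hidden_size)
for x_t in rng.normal(size=(6, input_size)):                # a 6-step toy sequence
    h = gru_step(x_t, h, params)

print(h.shape)  # (4,)
```

Because the new state is a gated blend of the old state and a candidate, the network can carry information across many steps by keeping the update gate close to zero.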

Limitations of RNNs

Despite their advantages, RNNs have several limitations:

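Gradient Issues: They are prone to vanishing or exploding gradients during training. Gradients that shrink toward zero make long-range dependencies hard to learn, while gradients that grow without bound destabilize training.

Computational Inefficiency: Because each time step depends on the previous one, computation cannot be parallelized across the sequence, which slows training on long sequences and large datasets.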
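The gradient issue can be seen numerically. During BPTT the gradient is multiplied by the recurrent weight matrix once per time step, so its size changes geometrically with sequence length. The sketch below is a deliberate simplification: it ignores the activation function and uses a scaled orthogonal matrix as `W_hh` so the effect is exact; the function name and all values are assumptions.

```python
import numpy as np

def gradient_norm_after(steps, scale, size=8, seed=0):
    """Norm of a unit gradient after being pushed back through `steps` time steps."""
    rng = np.random.default_rng(seed)
    Q, _ = np.linalg.qr(rng.normal(size=(size, size)))   # Q is orthogonal
    W_hh = scale * Q                                      # every singular value equals `scale`
    grad = np.ones(size) / np.sqrt(size)                  # unit-norm starting gradient
    for _ in range(steps):
        grad = W_hh.T @ grad                              # one step back in time
    return np.linalg.norm(grad)

vanishing = gradient_norm_after(steps=50, scale=0.5)
exploding = gradient_norm_after(steps=50, scale=1.5)
print(vanishing, exploding)
```

With a scale of 0.5 the norm shrinks by half at every step (about 0.5 to the power 50 after 50 steps), and with 1.5 it grows by the same logic. Gated architectures such as LSTMs and GRUs, and techniques such as gradient clipping, are common responses to this behavior.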

What are Word Embeddings?

Word embeddings are dense vector representations of words in a multi-dimensional space. Individual dimensions are usually not interpretable on their own; meaning is captured by a word's position relative to other words, so words used in similar contexts end up close together. These embeddings encode semantic relationships and similarities between words based on their context in a corpus of text.

Importance of Word Embeddings

Word embeddings have revolutionized natural language processing (NLP) by enabling machines to understand and process language more effectively than traditional methods like one-hot encoding. They are integral to various NLP tasks such as text classification, sentiment analysis, machine translation, and more.
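The contrast with one-hot encoding can be shown with cosine similarity. In the sketch below the dense vectors are hand-picked toy values, not trained embeddings, and the three-word vocabulary is an assumption.

```python
import numpy as np

def cosine(a, b):
    """Cosine similarity between two vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

words = ["cat", "dog", "car"]
one_hot = {w: np.eye(len(words))[i] for i, w in enumerate(words)}

# Hand-made dense vectors for illustration only (not learned from data)
dense = {
    "cat": np.array([0.9, 0.8, 0.1]),
    "dog": np.array([0.8, 0.9, 0.2]),
    "car": np.array([0.1, 0.2, 0.9]),
}

# One-hot: every pair of distinct words is equally unrelated
print(cosine(one_hot["cat"], one_hot["dog"]), cosine(one_hot["cat"], one_hot["car"]))

# Dense: similarity can reflect that "cat" is closer to "dog" than to "car"
print(cosine(dense["cat"], dense["dog"]), cosine(dense["cat"], dense["car"]))
```

One-hot vectors also grow with the vocabulary size, while dense embeddings keep a fixed, much smaller dimension.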

How Word Embeddings Work

Word embeddings are trained by exposing models to large text datasets, adjusting vector representations based on the context in which words appear. This process captures semantic meaning and relationships between words, enhancing the understanding of language nuances.

Popular Word Embedding Models

Word2Vec and GloVe are two popular models for training word embeddings. Word2Vec trains a shallow neural network either to predict surrounding words from a target word (Skip-gram) or to predict a target word from its surrounding words (CBOW), while GloVe leverages global statistics of word co-occurrence across the entire corpus.
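The two Word2Vec training setups differ only in which side of the (word, context) relationship is the input. The sketch below builds the training examples for both from a toy sentence; the helper name `context_windows`, the sentence, and the window size of 1 are assumptions, and the neural network that would consume these examples is not shown.

```python
def context_windows(tokens, window):
    """Yield (center word, list of neighbors within `window` positions)."""
    for i, center in enumerate(tokens):
        left = tokens[max(0, i - window):i]
        right = tokens[i + 1:i + 1 + window]
        yield center, left + right

tokens = ["the", "rose", "is", "red"]      # toy sentence

# Skip-gram: input is the center word, target is one context word at a time
skipgram_pairs = [
    (center, ctx_word)
    for center, ctx in context_windows(tokens, window=1)
    for ctx_word in ctx
]

# CBOW: input is the whole context, target is the center word
cbow_examples = [(ctx, center) for center, ctx in context_windows(tokens, window=1)]

print(skipgram_pairs)
print(cbow_examples)
```

For the word "rose", Skip-gram produces the pairs ("rose", "the") and ("rose", "is"), while CBOW produces the single example (["the", "is"], "rose").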

Applications of Word Embeddings

Word embeddings are used in diverse NLP applications:

Text Classification: Enhances features for tasks like sentiment analysis and topic categorization.

Named Entity Recognition (NER): Improves entity classification by understanding word context.

Machine Translation: Facilitates understanding of semantic relationships between words in different languages.

Information Retrieval: Enhances search engines by improving document relevance matching.

Question Answering: Aids in understanding contextual cues for accurate responses.

Text Generation: Contributes to generating coherent and contextually relevant text outputs.

Similarity and Analogy Tasks: Measures similarity and solves analogies between words.

Pre-trained Language Models: Embedding layers provide the input representation for advanced language models like BERT and GPT.
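The analogy task from the list above is usually solved with vector arithmetic followed by a nearest-neighbor search. The sketch below uses hand-constructed two-dimensional vectors (roughly "royalty" and "gender" axes) so that the arithmetic works out exactly; real embeddings are learned, have many more dimensions, and satisfy such analogies only approximately.

```python
import numpy as np

# Hand-made toy vectors for illustration only (not trained embeddings)
vectors = {
    "king":  np.array([1.0, 1.0]),
    "queen": np.array([1.0, -1.0]),
    "man":   np.array([0.0, 1.0]),
    "woman": np.array([0.0, -1.0]),
    "apple": np.array([-1.0, 0.0]),
}

def cosine(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def most_similar(query, exclude=()):
    """Return the vocabulary word whose vector is closest to `query` by cosine."""
    candidates = {w: v for w, v in vectors.items() if w not in exclude}
    return max(candidates, key=lambda w: cosine(query, candidates[w]))

# "king" is to "man" as ? is to "woman"
query = vectors["king"] - vectors["man"] + vectors["woman"]
answer = most_similar(query, exclude={"king", "man", "woman"})
print(answer)  # queen
```

Excluding the three input words from the search is standard practice, since the query vector often remains closest to one of them.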

Creating Word Embeddings

Creating word embeddings starts with preparing a large text corpus: tokenizing the text and removing noise such as punctuation and markup. A model is then trained on context windows, either predicting words from their neighbors or counting how often words occur together. Through training, words are positioned in a continuous vector space according to their semantic meanings and contexts.
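The preparation steps can be sketched as follows: tokenize, strip noise, build a vocabulary, and look words up in an embedding matrix. The regular expression, the toy corpus, the embedding dimension, and the matrix name `E` are assumptions, and `E` is only randomly initialized here; training is what would move the rows into meaningful positions.

```python
import re
import numpy as np

corpus = "Rose is red. The rose is a flower!"          # toy corpus

# Tokenize and remove noise: lowercase, keep only alphabetic runs
tokens = re.findall(r"[a-z]+", corpus.lower())

# Vocabulary: each distinct word gets an integer id
vocab = {word: i for i, word in enumerate(sorted(set(tokens)))}

# Embedding matrix: one row per word (random start, not yet trained)
rng = np.random.default_rng(4)
embedding_dim = 5                                       # arbitrary choice
E = rng.normal(scale=0.1, size=(len(vocab), embedding_dim))

# Looking up a sentence is just row indexing
token_ids = [vocab[t] for t in tokens]
sequence_vectors = E[token_ids]

print(tokens)
print(sequence_vectors.shape)                           # (8, 5)
```

Both occurrences of "rose" map to the same row of `E`, which is why static embeddings cannot distinguish different senses of one word.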

Word Embedding Techniques

Two main techniques for creating word embeddings are:

- Frequency-based Embeddings: Utilize word occurrence statistics like TF-IDF.

- Prediction-based Embeddings: Train models to predict context words or surrounding words.
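For the frequency-based family, a TF-IDF computation can be sketched in a few lines. Several TF-IDF variants exist; this sketch assumes term frequency as count divided by document length and inverse document frequency as the natural log of (number of documents / documents containing the word), with no smoothing. The toy documents are made up for illustration.

```python
import math
from collections import Counter

docs = [
    "rose is red".split(),
    "sky is blue".split(),
    "rose is a flower".split(),
]

def tf_idf(docs):
    """Return one {word: score} dict per document."""
    n_docs = len(docs)
    # Document frequency: in how many documents does each word appear?
    df = Counter(word for doc in docs for word in set(doc))
    scores = []
    for doc in docs:
        counts = Counter(doc)
        scores.append({
            word: (count / len(doc)) * math.log(n_docs / df[word])
            for word, count in counts.items()
        })
    return scores

scores = tf_idf(docs)
print(scores[0])
```

In the first document, "is" scores zero because it appears in every document, while "red" scores higher than "rose" because it appears in only one.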

Word2Vec and GloVe Models

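Word2Vec: Offers two architectures. CBOW predicts a target word from its surrounding context, while Skip-gram predicts the surrounding context from a target word. It is known for training efficiently on very large corpora.

GloVe: Focuses on global word co-occurrence statistics, fitting word vectors to co-occurrence counts with a weighted least-squares objective that is closely related to matrix factorization.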
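The "global co-occurrence statistics" that GloVe starts from can be sketched as a word-by-word count matrix. The sketch below only builds the counts; the GloVe training step, which fits vectors so that their dot products approximate the log of these counts, is not shown. The toy token stream and the window size are assumptions.

```python
import numpy as np

tokens = "the rose is red the sky is blue".split()     # toy token stream
window = 1                                              # neighbors on each side

vocab = sorted(set(tokens))
index = {word: i for i, word in enumerate(vocab)}

# X[i, j] counts how often word j appears within `window` positions of word i
X = np.zeros((len(vocab), len(vocab)))
for i, word in enumerate(tokens):
    lo = max(0, i - window)
    hi = min(len(tokens), i + window + 1)
    for j in range(lo, hi):
        if j != i:
            X[index[word], index[tokens[j]]] += 1

print(vocab)
print(X)
```

Unlike the Word2Vec examples, which are generated one window at a time, this matrix summarizes the whole corpus before any vectors are trained.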

Beyond Word2Vec and GloVe

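Advanced models like FastText, BERT, and GPT go further. FastText builds word vectors from subword (character n-gram) embeddings, which helps with rare and unseen words. BERT and GPT use transformers with attention mechanisms to produce contextual embeddings, so the same word gets a different vector in different sentences, which helps with polysemy and complex semantic relationships.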
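The subword idea behind FastText can be illustrated by extracting character n-grams from a word wrapped in boundary markers. This is a sketch of the n-gram extraction only; the function name is hypothetical, and the n-gram vectors that FastText would learn and sum into a word vector are not shown.

```python
BOW, EOW = "<", ">"     # word boundary markers

def char_ngrams(word, n_min=3, n_max=6):
    """Return all character n-grams of the boundary-wrapped word."""
    wrapped = BOW + word + EOW
    grams = []
    for n in range(n_min, n_max + 1):
        for i in range(len(wrapped) - n + 1):
            grams.append(wrapped[i:i + n])
    return grams

trigrams = char_ngrams("where", n_min=3, n_max=3)
print(trigrams)

# Related word forms share n-grams, so a rare form can borrow from a common one
shared = set(char_ngrams("rose")) & set(char_ngrams("roses"))
print(sorted(shared))
```

Because "roses" shares several n-grams with "rose", a model built on subwords can produce a reasonable vector for one even if it rarely or never saw it during training.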

Conclusion

Word embeddings are essential for understanding and representing language in NLP tasks, providing nuanced insights into word meanings and relationships. They continue to drive innovations in machine learning and AI by enabling more accurate and context-aware language processing.